Last year we had to deal with a not so common problem. We had to migrate our MySQL RDS instances from one AWS account to another.

We were pointed in the direction of DMS (Data Migration Service), Amazon's data migration service to achieve exactly this. We used the service; migrated the database to our new account and everyone lived happily ever after. 😉

Except nothing worth achieving is ever so easy; and while DMS is an incredible tool there are a few more things you will be required to do; especially if you do not want any down time.

Setting up DMS (If you are familiar with DMS or more interested in LIVE MIGRATION skip to here.)

This is easy enough. You will first need to setup a VPC peering between VPCs in the two AWS accounts. Then go over to the DMS service and setup the following.

Setup Source & Destination end points.

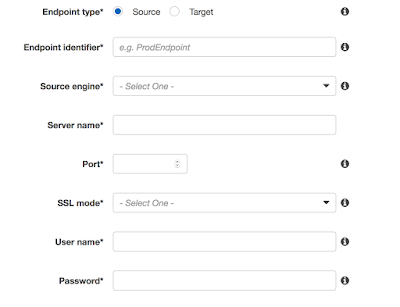

Use the simple form below and setup the source and destination endpoints.

- The endpoint type will allow you to create a source/target end point.

- The endpoint identifier is any unique easy to identify name for the endpoint. The engine

- The engine is essentially the db provider (oracle, aurora, mysql, postgres etc)

- Server name, port can be found from the RDS instance.

- For SSL Mode just select none.

- Key in the username and password and we are done.

Create the replication instance

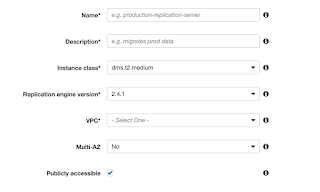

This is the heart of DMS. The replication instance runs on an EC2 and moves data from one database to another.

All fields are self explanatory. Use the appropriate instance (Class T or C) per your requirement. Also ensure to put in the right amount of Allocated Storage in the advanced option.

Use the replication instance to test connections to The source and target databases.

Create Replication Task

Create a replication task by using an appropriate task name, and selecting the source/target endpoints and replication instance. Depending on your need select the migration type. For cross account replication we went with "Migrate Existing data and replicate ongoing changes".

Select the drop target table option to begin afresh every time you start the task. Select Max LOB size and use advanced options to fine tune the job if required.

Run the replication Task

Select the replication task and click start. If your setup is correct the job should migrate data across to the new database. Any changes you make data in the sour ce database will (almost immediately) move to the new database. You may run into small issues related to your specific database (ex: binlog_format should be set to ROW for mysql database). But these are usually easily resolved.

DMS Works but Target DB is a dud

At this point DMS has done its job. It has successfully moved data across to the target database. In the process though it removes all foreign keys, indexes (AUTO_INCREMENTS for mysql) and retains only the core data and primary key declarations.

Having inherited a database made long ago, not all scripts were available neither could we guarantee that the scripts that were available we indeed the latest scripts.

We used the system tables in the source database to gather information about indexes, auto_increments (in case of mysql), foreign keys and created our own information tables over in the target database. Once the indexes and foreign keys are created the database is nearly ready. All you need is set the value of auto_increment/sequence in the database.

Live Migration (NO DOWN TIME)

Keeping DMS running means that transactions are migrated to the new database. But to perform live migration you need to do a small trick.

Estimate the time it will take you to, server by server application by application, move your system over to the new database while it functions (of course behind the load balancer). Analyze and estimate the number of transactions that your most OLTP heavy table will see in this duration. Use that number to increase the value of the sequences sufficiently.

Example: If you believe that you will be able to migrate applications to new db in about an hour and your order table roughly receives 1000 orders per hour; increase the value of AUTO_INCREMENT (MySQL) , Sequence (Oracle) by about 1100 or so.

Once this is done, you can safely point your applications to both the old and new database and avoid PRIMARY KEY clashes. New rows inserted in the new database will have primary keys values much larger than those of rows inserted in the old database (which are being migrated over by DMS).

With this setup, you can now begin to migrate load balanced applications over to the new database safely.

Few key things that you will need to be mindful of:

- Study and know your applications/modules well. Ensure you migrate them in proper order. If Module 1 creates data that is then read by Module 2; make sure you move Module 2 before Module 1. Doing this the other way round may lead to unexpected issues. Module 1 will create a row in the new database, but since data does not get migrated backwards Modules two will not find the data in the old database.

- Use a 3rd RDS instance (served by DMS from the 2nd ) to ensure you have a database with both old DB and new data DB in case you need to roll back.

- Try and perform this operation during relatively off peak hours.

- Conduct numerous trials in sandbox environment. As many as you need.